Tracking the pricing trajectory for large language model APIs

Large language models (LLMs) like ChatGPT and GPT-4 might currently be at the center of the conversation, but many developers feel that they’re still too expensive for their use cases. We share our thoughts and predictions on the future of LLMs and their pricing trajectories.

We joke at Inworld that if you don’t like the price of an LLM, wait a month.

Indeed, OpenAI’s cost of running ChatGPT is already down 90% since December 2022, and the company has passed those savings along in its pricing. In this piece, we survey today’s commercial LLM APIs and the trends we expect to push their prices down further.

Current Commercial LLM APIs

Starting with Google’s groundbreaking 2018 release of BERT, we’ve seen LLMs scale rapidly in parameters and capacity, from BERT’s 110 million parameters to GPT-3’s 175 billion and now to the recently released GPT-4, which is rumored to dwarf GPT-3.

But a look at the current and future state of LLM APIs shows that, while OpenAI has recently dominated the sector, we’re on the cusp of a much more competitive ecosystem.

OpenAI models

The current commercial LLM APIs on the market are primarily offered by OpenAI:

- GPT-4

- GPT-4-32k

- ChatGPT (GPT-3.5 Turbo)

- GPT-3.5 Davinci

- GPT-3 Curie

- GPT-3 Babbage

- GPT-3 Ada

Other popular models

However, major tech companies like Google and AI companies like Cohere and Anthropic also offer their own commercially available models.

- PaLM (Google)

- Claude (Anthropic)

- Generate (Cohere)

Forthcoming models

Several AI startups, including Hugging Face and AI21 Labs, are also building general-purpose large language models, and a number of companies are developing models for highly specific use cases such as medical or enterprise applications.

What does more competition for LLMs mean?

Because the LLM space is set to become richer and more competitive in the near future, metered costs are likely to fall considerably as more options become available.

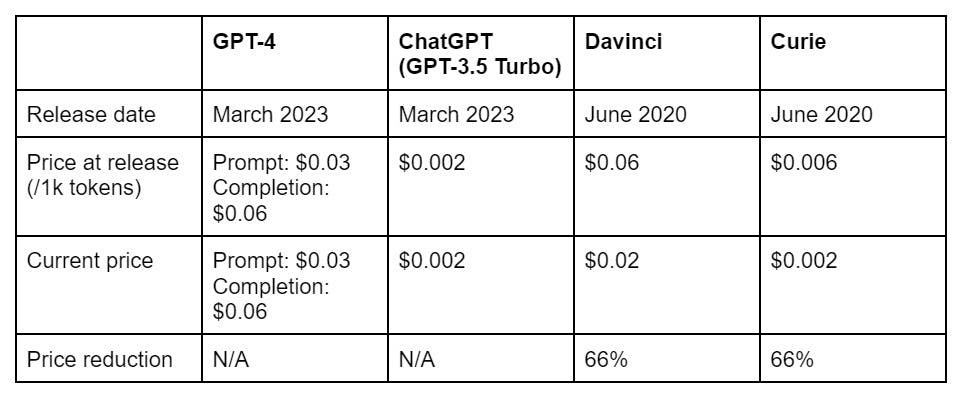

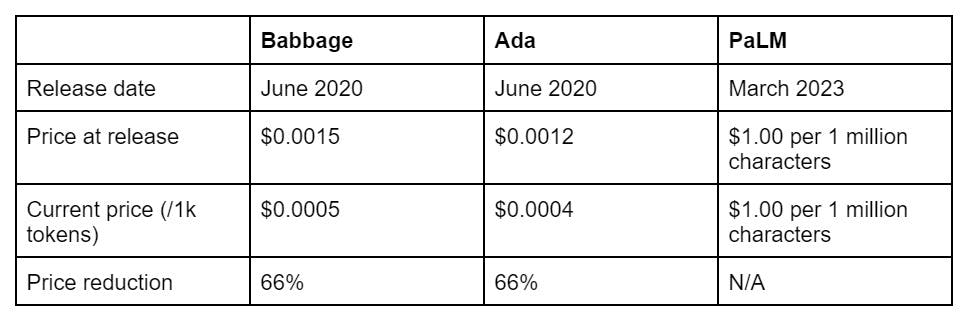

As the charts above show, costs have already come down dramatically since many existing models launched.
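Because most LLM APIs bill per token, it helps to see how a per-1K-token price turns into a monthly bill. The sketch below is a minimal calculator under stated assumptions: `TokenPrice`, `request_cost`, and `monthly_cost` are hypothetical helpers, and the prices and traffic figures are illustrative, not any provider’s current rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """Hypothetical per-1K-token prices in US dollars (illustrative only)."""
    prompt_per_1k: float
    completion_per_1k: float


def request_cost(price: TokenPrice, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost of a single call under metered, per-token billing."""
    return (prompt_tokens / 1000) * price.prompt_per_1k + (
        completion_tokens / 1000
    ) * price.completion_per_1k


def monthly_cost(price: TokenPrice, prompt_tokens: int, completion_tokens: int,
                 requests_per_month: int) -> float:
    """Cost of a steady workload where every request has the same token counts."""
    return request_cost(price, prompt_tokens, completion_tokens) * requests_per_month


# Illustrative workload: 1M requests/month, 500 prompt + 250 completion tokens each.
before = TokenPrice(prompt_per_1k=0.02, completion_per_1k=0.02)
after = TokenPrice(prompt_per_1k=0.002, completion_per_1k=0.002)  # a 90% price cut

for label, price in [("before", before), ("after", after)]:
    bill = monthly_cost(price, 500, 250, 1_000_000)
    print(f"{label}: ${bill:,.2f} per month")
```

With these made-up numbers the bill drops from about $15,000 to about $1,500 a month. Prompt and completion rates are kept separate because some APIs price them differently.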

Cheaper LLM APIs are coming for other reasons, too

In addition to a more competitive LLM ecosystem pushing prices downwards, the trajectory of LLM research and development is headed in a number of exciting directions that will result in significant cost reductions.

We’ll soon see LLMs become cheaper and faster, to the point that they’ll be built into far more applications and devices than is technically possible or financially sensible today. Here are the six trends that will bring that about:

- Cheaper and more powerful ML hardware

- Distilled models

- New solutions to hallucinations

- Smaller models

- Niche models

- PCs, phones, and tablets optimized for AI

1. Cheaper and more powerful ML hardware

TL;DR - Training and running models is about to become much cheaper and more efficient

The most recent wave of machine learning advancements began with NVIDIA GPUs that were optimized for 3D graphics. Now, NVIDIA and a number of other companies are in a race to build the future machine learning tech stack.

This includes Google, which introduced its Tensor Processing Units (TPUs) in 2016; Amazon Web Services, which introduced Inferentia in 2019 and Trainium in 2020; and companies like Habana, Graphcore, Cerebras, and SambaNova. Further hardware innovation will improve price-to-performance and lower hardware prices while speeding up training and inference. The result? The cost of future models will fall.
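Price-to-performance matters because serving cost per token is roughly the hourly price of an accelerator divided by how many tokens it produces in that hour. The sketch below makes that arithmetic explicit. The hourly rates and throughputs are hypothetical, and it assumes a fully utilized instance, which real deployments rarely achieve.

```python
def cost_per_million_tokens(hourly_rate_usd: float, tokens_per_second: float) -> float:
    """Serving cost per 1M generated tokens on one fully utilized instance.

    Idle time, networking, and engineering costs are deliberately ignored.
    """
    tokens_per_hour = tokens_per_second * 3600
    return hourly_rate_usd / tokens_per_hour * 1_000_000


# Hypothetical hardware generations serving the same model: the newer chip
# costs 50% more per hour but delivers 3x the throughput.
current_gen = cost_per_million_tokens(hourly_rate_usd=4.00, tokens_per_second=500)
next_gen = cost_per_million_tokens(hourly_rate_usd=6.00, tokens_per_second=1500)

print(f"current generation: ${current_gen:.2f} per 1M tokens")
print(f"next generation:    ${next_gen:.2f} per 1M tokens")
```

In this made-up scenario the pricier chip halves the cost per token, which is the sense in which better price-to-performance lowers what a model costs to run.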

2. Distilled models

TL;DR - Models can be distilled with little loss in accuracy to reduce costs and latency

Distillation is a process where a smaller "student" model, often built by removing parts of a larger model, is trained to reproduce the outputs of that bigger "teacher" model. This strategy was validated in 2019 by DistilBERT, which retained 97% of BERT’s language understanding capabilities while being 40% smaller and running 60% faster.

Distillation reduces latency, hosting costs, and GPU costs for the model developer, and those savings can then be passed along to API customers.
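The teacher-student idea can be sketched as a loss function. This minimal example follows the generic soft-target formulation from Hinton et al. (2015): the student is penalized by the KL divergence between the teacher’s and student’s output distributions, both softened by a temperature, and that term is blended with ordinary cross-entropy on the true label. It is an illustration only; DistilBERT’s published training recipe adds further loss terms, and the `temperature` and `alpha` defaults here are arbitrary assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


def combined_loss(student_logits, teacher_logits, true_label, temperature=2.0, alpha=0.5):
    """Blend the soft-target loss with cross-entropy on the ground-truth label."""
    hard = -math.log(softmax(student_logits)[true_label])
    soft = distillation_loss(student_logits, teacher_logits, temperature)
    return alpha * soft + (1 - alpha) * hard


teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # mimics the teacher's ranking and confidence
far_student = [0.2, 3.0, 1.0]    # prefers a different class

print(distillation_loss(close_student, teacher))  # lower loss
print(distillation_loss(far_student, teacher))    # higher loss
```

Minimizing `combined_loss` over a training set is what pushes a small student toward the teacher’s behavior; in practice this would run inside a framework such as PyTorch or JAX rather than in pure Python.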

3. New solutions to hallucinations

TL;DR - New ways to address hallucinations would lead to smaller models

Currently, AI models can’t avoid hallucinations and factual inaccuracies entirely. To reduce them, LLM developers have focused on costly mitigation strategies like scaling datasets, adding parameters, and fine-tuning. But while those measures reduce how often hallucinations happen, hallucinations stem from a fundamental problem in the architecture that current approaches can’t solve.

Google acknowledged as much in its 2022 paper on the LaMDA model, writing that while scaling improves response accuracy, it doesn’t eliminate hallucinations entirely and adds cost. We expect new innovations to tackle this problem, changing how models are designed and built and reducing both price and latency.

4. Smaller models

TL;DR - ML research is moving towards smaller models

Advances in training processes will lead the industry to focus on building smaller models. Smaller models can offer significant cost and latency improvements with minor degradation, or even improvements, in quality. As early as 2016, SqueezeNet matched AlexNet’s accuracy on ImageNet with 50x fewer parameters and, once compressed, a model size under 0.5 MB. Similar results have been replicated in other types of models.

That will give devs far more choice of LLM APIs in the future. It will also make LLMs viable for many use cases where they’re currently too expensive to deploy.
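One reason smaller models are cheaper and faster to serve: when a model generates text one token at a time at batch size 1, every weight has to be read from memory for each token, so decoding is often limited by memory bandwidth rather than compute. The sketch below turns that rule of thumb into a rough lower bound on per-token latency. The bandwidth figure and the 10x-smaller comparison model are hypothetical, and the estimate ignores compute, the attention cache, and splitting a model across devices.

```python
def weight_bytes(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Bytes needed to store the weights (2 bytes per parameter for fp16/bf16)."""
    return num_params * bytes_per_param


def min_ms_per_token(num_params: float, bandwidth_gb_per_s: float,
                     bytes_per_param: float = 2.0) -> float:
    """Rough lower bound on decode latency per token at batch size 1.

    Assumes every weight is streamed from memory once per generated token and
    ignores compute time, the attention (KV) cache, and multi-device setups.
    """
    seconds = weight_bytes(num_params, bytes_per_param) / (bandwidth_gb_per_s * 1e9)
    return seconds * 1000


BANDWIDTH_GB_PER_S = 1000  # hypothetical accelerator memory bandwidth

for name, params in [("175B-parameter model", 175e9), ("10x smaller model", 17.5e9)]:
    print(f"{name}: >= {min_ms_per_token(params, BANDWIDTH_GB_PER_S):.0f} ms per token")
```

Because the bound scales linearly with parameter count, a 10x smaller model has a 10x lower floor (350 ms versus 35 ms per token with these made-up numbers), and it needs 10x less memory to host.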

5. Niche models

TL;DR - Expect more small models optimized for specific use cases

Rather than continued growth in general-purpose LLMs like those developed by OpenAI, expect to see a growing market for niche models built for specific use cases where depth of training data matters more than breadth. Today, a general-purpose LLM can get you 80% of the way there, but niche models will be able to get you the final 20%.

While some niche models might be more expensive due to the need to license costly datasets, most niche and smaller models will cost less than larger general-purpose models. That added competition will push down the cost of general-purpose models in kind.

6. PCs, phones, and tablets optimized for AI

TL;DR - The consumerization of machine learning is on the horizon

The future of generative AI will be consumerized. In just a few years, expect individuals to be able to create their own small, high-quality, cost-effective models that they can fine-tune for their own tasks or train on their own data. Today, a key impediment isn’t just the size of models but also the limited capabilities of consumer hardware like laptops, phones, and tablets. But the next generation of consumer electronics will be optimized for generative AI, with more memory and dedicated transformer cores, because the industry believes consumer models will soon be economically viable.
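Whether a model can run on a laptop or phone comes down largely to whether its weights fit in the device’s memory. The helper below is a hypothetical back-of-the-envelope check. The 20% headroom for activations, the attention cache, and the operating system is an arbitrary assumption, as are the 7-billion-parameter model and the 8 GB device; the 1-byte and half-byte options assume lower-precision storage (8-bit or 4-bit quantization), a compression technique not covered in this post.

```python
def memory_needed_gb(num_params: float, bytes_per_param: float,
                     overhead_fraction: float = 0.2) -> float:
    """Weights plus an assumed safety margin, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param * (1 + overhead_fraction) / 1e9


def fits_on_device(num_params: float, bytes_per_param: float, device_ram_gb: float,
                   overhead_fraction: float = 0.2) -> bool:
    """True if the estimated footprint fits within the device's RAM."""
    return memory_needed_gb(num_params, bytes_per_param, overhead_fraction) <= device_ram_gb


# Hypothetical 7B-parameter model on a hypothetical device with 8 GB of RAM.
for label, bytes_per_param in [("16-bit", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)]:
    needed = memory_needed_gb(7e9, bytes_per_param)
    print(f"{label}: {needed:.1f} GB needed, fits: {fits_on_device(7e9, bytes_per_param, 8)}")
```

Under these assumptions only the 4-bit variant fits in 8 GB, which illustrates why more on-device RAM widens the range of models consumers can run locally.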

Takeaways about future pricing

Expect more cost reductions

All indications point to significant cost reductions for LLM APIs in the near future. Beyond the industry factors laid out above, OpenAI’s own history points to steady price decreases: since GPT-3 launched in 2020, its prices have gone down 66%, and in just three months, ChatGPT’s costs are down 90%.
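Percentage cuts are easier to compare when converted into price multiples: a 90% reduction means paying one tenth of the old price, while a cut of about two-thirds is roughly a 3x drop. The sketch below does that conversion. The per-1K-token prices are illustrative values chosen to reproduce those percentages, not a record of any provider’s price history.

```python
def percent_reduction(old_price: float, new_price: float) -> float:
    """Percentage by which a price fell."""
    return (old_price - new_price) / old_price * 100


def times_cheaper(old_price: float, new_price: float) -> float:
    """How many times cheaper the new price is (a 90% cut means 10x cheaper)."""
    return old_price / new_price


# Illustrative per-1K-token prices in USD.
examples = {
    "a two-thirds cut": (0.06, 0.02),
    "a 90% cut": (0.02, 0.002),
}

for label, (old, new) in examples.items():
    print(f"{label}: {percent_reduction(old, new):.1f}% lower, "
          f"{times_cheaper(old, new):.1f}x cheaper")
```

The same arithmetic applies to any vendor’s price history: a 90% drop in what you pay per token is a 10x increase in how many tokens the same budget buys.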

We’re seeing the same thing with our costs at Inworld. Since launching our studio for AI characters in 2021, our prices have gone down 90%. As we find cost efficiencies, our goal is to pass them along to our customers.

But also know that consumers are willing to pay...

It’s important to remember that developers building with AI now are not just building at the bleeding edge; they’re also building for a market willing to pay. For example, our Future of NPC report found that 81% of gamers were willing to pay more for a game with Inworld NPCs in it. How much more? An average of $10 more per month, with 43% willing to pay $11 to $20 more per month.

For that reason, LLMs, and value-added solutions like Inworld that optimize for personality-driven experiences with added features and orchestration, present a significant revenue opportunity even at today’s prices. Learn more about how to decide whether to use an LLM API directly or a value-added provider like Inworld here.

Interested in trying out Inworld’s 20+ machine learning models and value-added features? Test out our Studio today for free!