Mutations, Goals, and Multi-Character in our GDC Ambassador demo

I wanted to share a bit about my recent work as technical lead on Inworld’s GDC Quinn Ambassador demo, which was designed to be the central experience at our GDC booth this year. Quinn is our AI ambassador, and her job is to welcome conference attendees to our booth and suggest other Inworld demos you might be interested in. That is, if you can distract her from playing Street Fighter 2 with her buddy. ;)

The Quinn Ambassador demo has two scenes. The first uses Inworld’s Multi-Character feature: two characters chat while playing video games. The second shows Quinn asking the player questions and using Goals and Mutations to transform into different versions of herself.

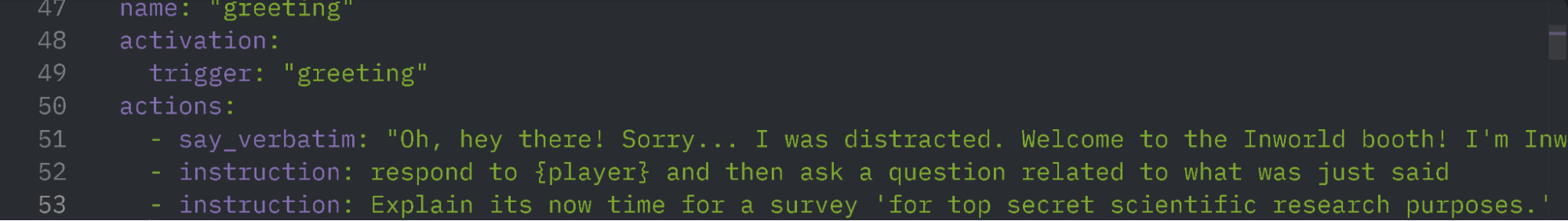

The first scene depends heavily on topic injection within Inworld Studio to program the characters' brains, which keeps both characters focused on the scene they are in. We inject topics such as "discussing the enjoyment of shouting 'Hadouken'," "exploring attempts to replicate Ryu's signature uppercut in real life," and "examining Guile's mastery of the sonic boom," while also making sure their brains do not repeat topics. We tuned them to trade playful banter and sustain a reasonably realistic, humorous conversation while playing Street Fighter 2.
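To make the "don't repeat topics" idea concrete, here is a minimal sketch in Python. It is only an illustration of the behavior, not how Inworld Studio implements topic injection; the `TopicInjector` class and its methods are names I made up for this example.

```python
import random


class TopicInjector:
    """Hands out topics so that none repeats until every topic has been used once.

    Hypothetical helper for illustration; Inworld Studio handles this server-side.
    """

    def __init__(self, topics, seed=None):
        self._all_topics = list(topics)
        self._rng = random.Random(seed)
        self._remaining = []

    def next_topic(self):
        # Refill and reshuffle only after the whole list has been handed out.
        if not self._remaining:
            self._remaining = self._all_topics[:]
            self._rng.shuffle(self._remaining)
        return self._remaining.pop()


TOPICS = [
    "discussing the enjoyment of shouting 'Hadouken'",
    "exploring attempts to replicate Ryu's signature uppercut in real life",
    "examining Guile's mastery of the sonic boom",
]

injector = TopicInjector(TOPICS, seed=7)
first_round = [injector.next_topic() for _ in TOPICS]
```

One caveat with this simple version: the last topic of one round can also come up first in the next round, so a production version would want to guard that boundary.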

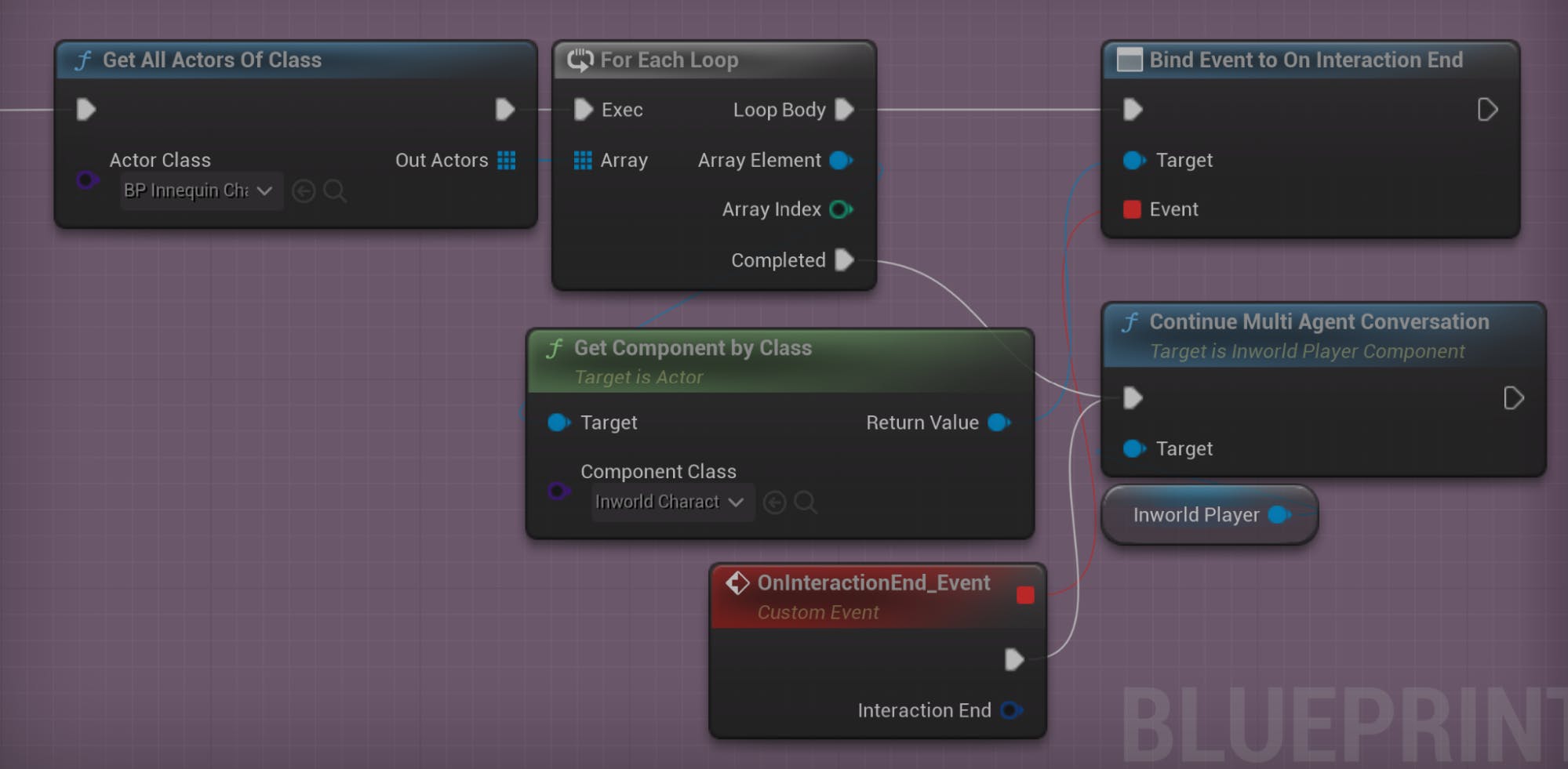

In Unreal Engine, the key technique for multi-character banter was binding to each character's OnInteractionEnd event. When that event fires, the handler calls the continueMultiAgentConversation function. This keeps the conversation flowing smoothly and lets each character finish speaking before the other jumps in.
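The turn-taking pattern is easier to see stripped of engine details. Below is a rough, engine-agnostic sketch in Python rather than Unreal code: the `Character` and `MultiAgentConversation` classes are stand-ins I invented, `on_interaction_end` plays the role of the OnInteractionEnd event, `continue_conversation` plays the role of continueMultiAgentConversation, and "Buddy" and the dialogue lines are placeholders.

```python
from collections import deque


class Character:
    """Stand-in for a character with an interaction-end event (hypothetical)."""

    def __init__(self, name, lines):
        self.name = name
        self.lines = deque(lines)
        self.on_interaction_end = []  # callbacks bound to the event

    def speak(self, transcript):
        # Deliver one full line, then announce that this interaction has ended.
        transcript.append((self.name, self.lines.popleft()))
        for callback in list(self.on_interaction_end):
            callback(self)


class MultiAgentConversation:
    """Passes the turn to the next character whenever one finishes speaking."""

    def __init__(self, characters):
        self.characters = characters
        self.transcript = []
        self._next_index = 0
        for character in characters:
            character.on_interaction_end.append(self._handle_interaction_end)

    def _handle_interaction_end(self, _finished_character):
        self.continue_conversation()

    def continue_conversation(self):
        speaker = self.characters[self._next_index]
        if not speaker.lines:
            return  # nothing left to say, so the banter stops here
        self._next_index = (self._next_index + 1) % len(self.characters)
        speaker.speak(self.transcript)


quinn = Character("Quinn", ["Hadouken!", "Rematch?"])
buddy = Character("Buddy", ["Sonic boom!", "You're on."])
conversation = MultiAgentConversation([quinn, buddy])
conversation.continue_conversation()  # kick off the first turn
```

In the sketch the event fires synchronously right after a line is appended; in the engine it would fire asynchronously once the character's audio and animation have actually finished, which is what prevents the characters from talking over each other.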

At our GDC booth, attendees can press a button to engage with Quinn. This starts a scene where Quinn sets aside her controller, stands up, and walks to her handcrafted cardboard booth to talk to you. Quinn will ask you a series of questions, and based on your responses, she begins transforming into different characters.

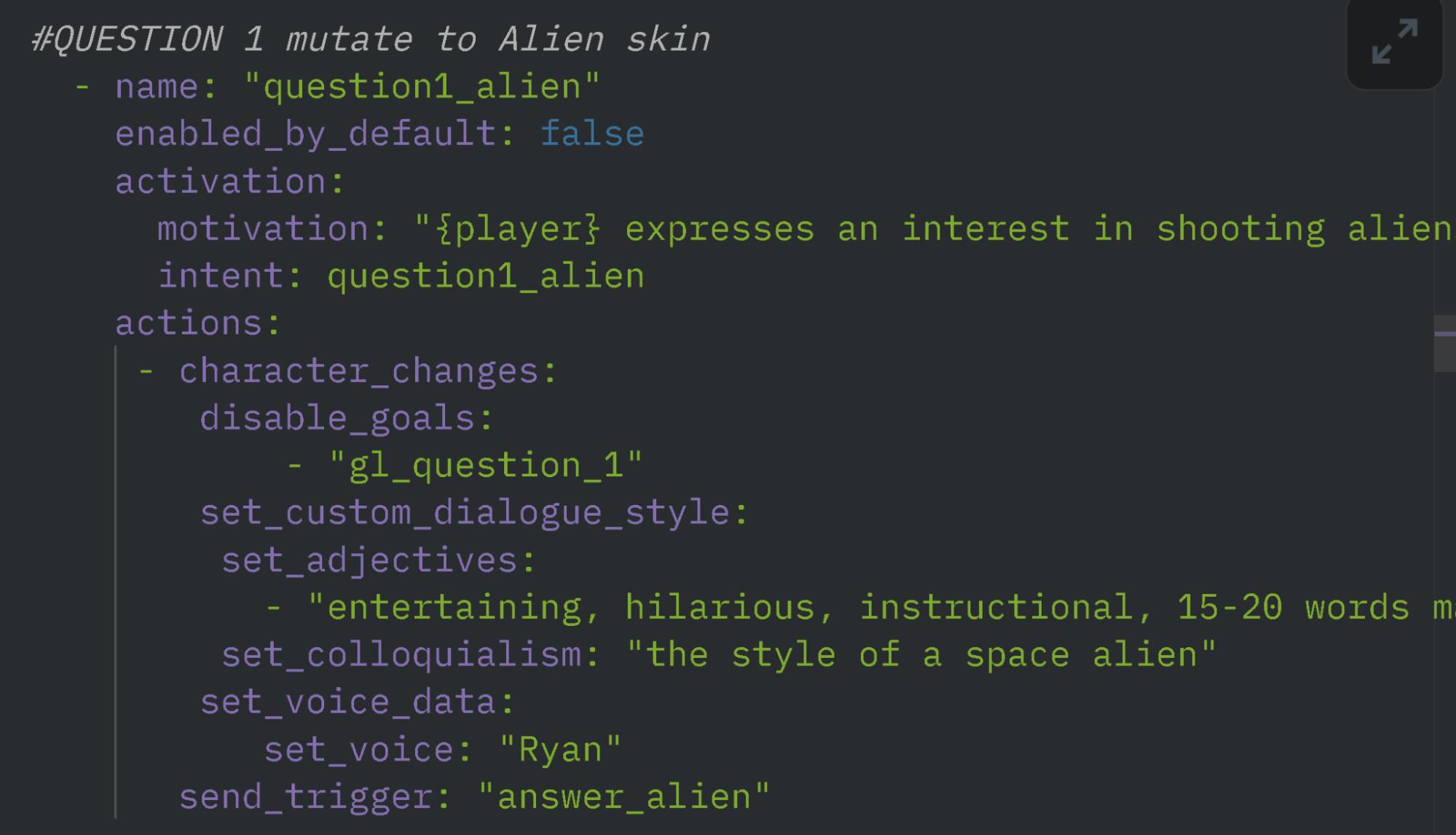

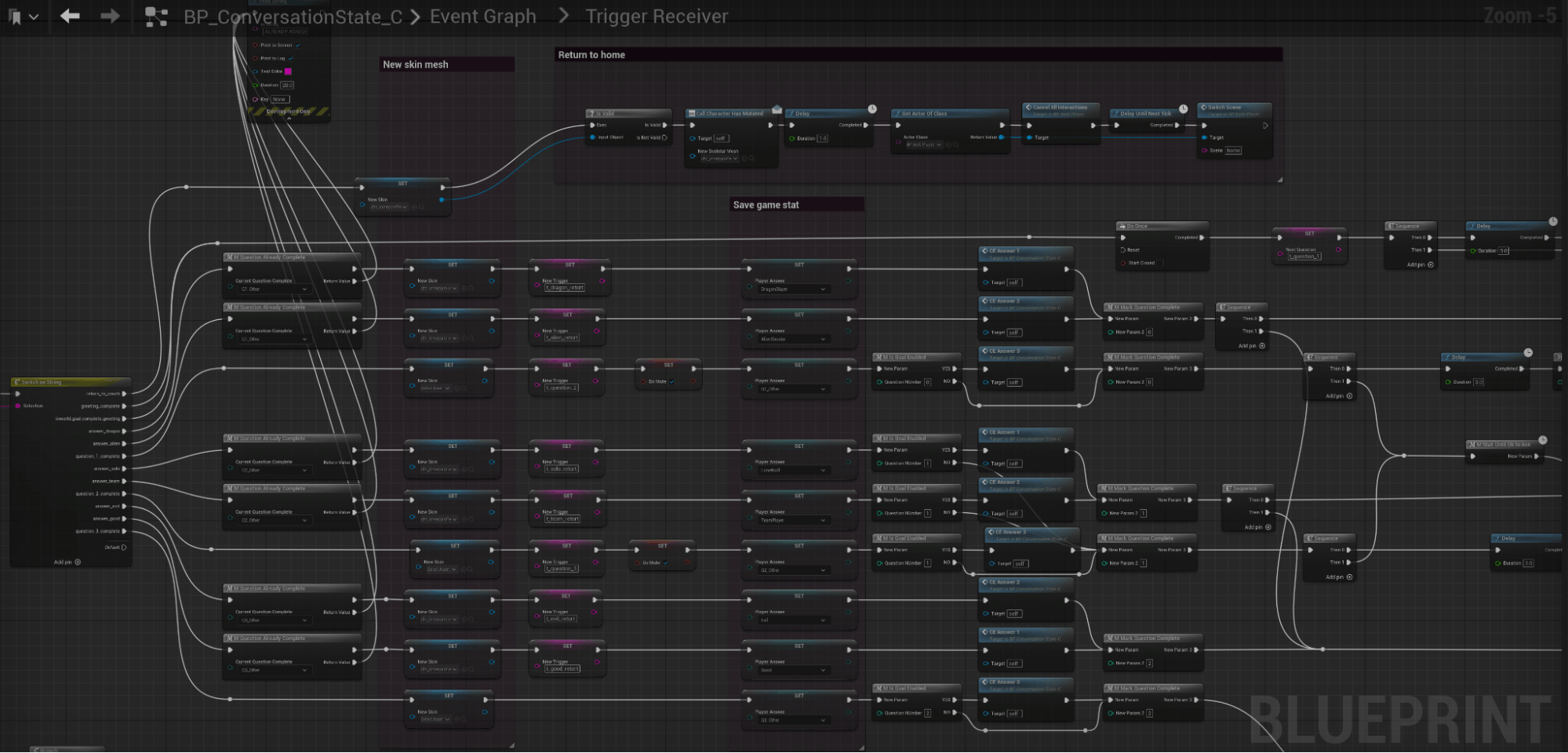

Much of this process involved animation and Niagara VFX in Unreal Engine, which I had a lot of fun creating. Under the hood, everything is controlled through Inworld's Goals and Mutations features, which are configured server-side using Inworld Studio. Whenever Quinn asks a question, your response triggers a new goal (written in YAML) and initiates a character mutation that alters Quinn’s personality, language, colloquialisms, and voice. The voices are powered by Inworld Voice 2.0; they sound amazing and have very low latency.

After her three questions, Quinn analyzes your answers and guides you to one of the eight other demos at Inworld's booth.

As you’re answering questions, Quinn drops a billboard to give you options for responding. We wanted the billboards to drop precisely when our character spoke specific phrases. This was challenging in Unreal, but we managed to capture each utterance just before the character spoke it and parse it for those keywords. We then inserted the keywords into line-by-line goal instructions on the Studio side (in YAML), surrounding them with single quotes so that Quinn would say them verbatim.
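Here is a small Python sketch of the keyword-parsing step, assuming the client receives the text of an utterance shortly before the audio plays. The cue phrases, action names, and the `find_cues` function are all hypothetical; the real demo did this in Unreal.

```python
import re

# Hypothetical cue phrases mapped to hypothetical client-side actions.
CUES = {
    "first question": "drop_billboard_1",
    "one more thing": "drop_billboard_2",
}


def find_cues(utterance, cue_table):
    """Return the actions whose cue phrase appears in the utterance, in spoken order."""
    hits = []
    lowered = utterance.lower()
    for phrase, action in cue_table.items():
        # Word boundaries keep a cue from matching inside a longer word.
        match = re.search(r"\b" + re.escape(phrase.lower()) + r"\b", lowered)
        if match:
            hits.append((match.start(), action))
    return [action for _, action in sorted(hits)]


actions = find_cues("Okay, here is my First Question for you!", CUES)
```

Matching like this only works if the character says the cue phrase exactly, which is why pinning the wording in the goal instructions matters.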

Our goal was to create a highly interactive experience by incorporating animations, VFX, sound effects, new questions, and calculated responses triggered by various events at precisely the right moment. The result was a complex network of delays and timings, which I plan to simplify in our SDK to make life easier for our developers. All things considered, I’m really proud of what we were able to accomplish in a short amount of time!

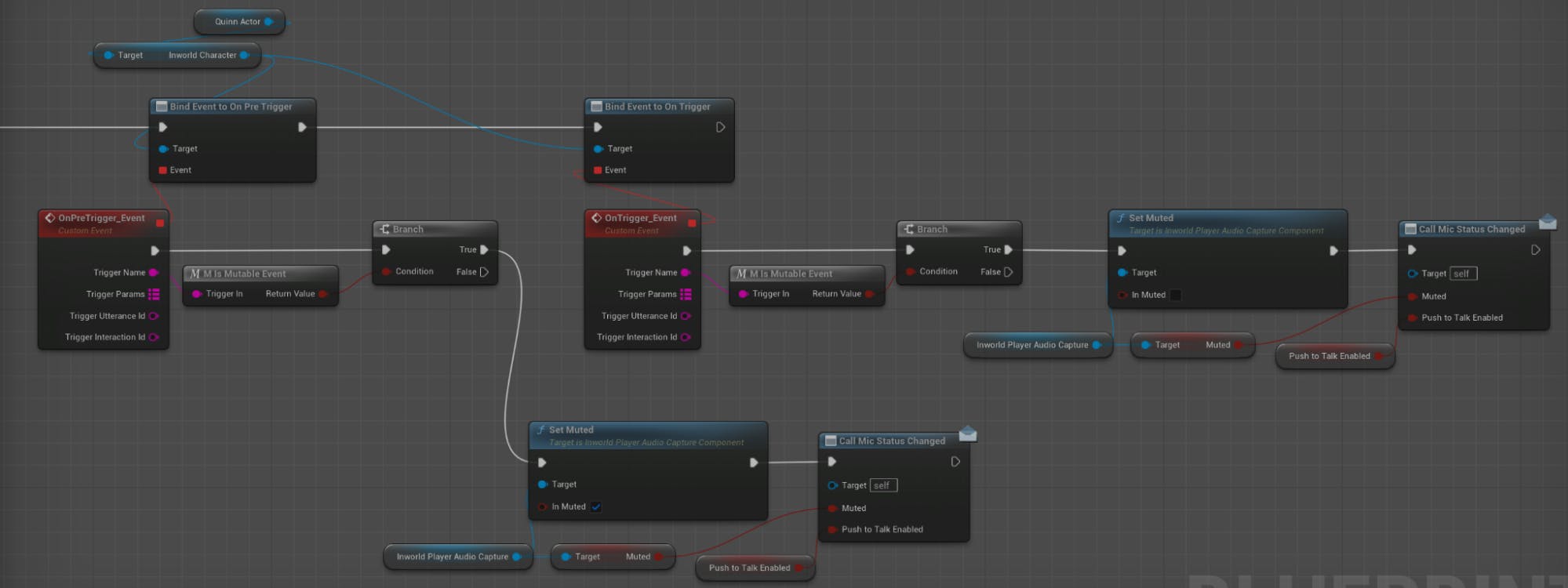

For this experience, we wanted to prevent attendees from interrupting Quinn’s questions, so I devised and implemented a new pre-trigger event in our SDK, along with a mechanism to mark YAML instructions as non-interruptible. The solution sends an OnPreTrigger event to Unreal Engine (or any client-side SDK) before the instruction executes, followed by the standard OnTrigger event afterward. While this implementation may not represent the final external API, it spurred us to explore ways to enhance the trigger API for achieving precise timings in Unreal Engine. Better trigger timing gives developers a way to creatively orchestrate events at the right moments during interactions with AI agents.
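As a rough picture of how a client might use the two events, here is a Python sketch that locks out interruptions between the pre-trigger and the trigger. None of this is the SDK's actual API: `TriggerDispatcher`, `InterruptGate`, and the `non_interruptible` key are assumptions made purely for illustration.

```python
class TriggerDispatcher:
    """Fires a pre-trigger event, runs the instruction, then fires the trigger event."""

    def __init__(self):
        self.on_pre_trigger = []
        self.on_trigger = []

    def run(self, instruction, execute):
        for callback in self.on_pre_trigger:
            callback(instruction)
        execute(instruction)
        for callback in self.on_trigger:
            callback(instruction)


class InterruptGate:
    """Blocks player interruptions while a non-interruptible instruction runs."""

    def __init__(self, dispatcher):
        self.locked = False
        dispatcher.on_pre_trigger.append(self._lock)
        dispatcher.on_trigger.append(self._unlock)

    def _lock(self, instruction):
        # "non_interruptible" is a hypothetical flag name for this sketch.
        if instruction.get("non_interruptible"):
            self.locked = True

    def _unlock(self, _instruction):
        self.locked = False

    def try_interrupt(self):
        return not self.locked


dispatcher = TriggerDispatcher()
gate = InterruptGate(dispatcher)
attempts = []


def speak(_instruction):
    # Simulate a player trying to talk over Quinn while the instruction runs.
    attempts.append(gate.try_interrupt())


dispatcher.run({"say": "What's your favorite genre?", "non_interruptible": True}, speak)
attempts.append(gate.try_interrupt())  # after the trigger event, input is allowed again
```

The same pair of events could also bracket animations or VFX that need to start just before a line is spoken and finish when it ends.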

I had a blast and learned a ton building the Quinn Ambassador demo. You can’t miss the 12’ tall tower at our GDC booth P1615, so please stop by and let me know if you have any questions or feedback!